Taesung Park (1, 2), Jun-Yan Zhu (2, 3), Oliver Wang (2), Jingwan Lu (2), Eli Shechtman (2), Alexei A. Efros (1, 2), Richard Zhang (2)

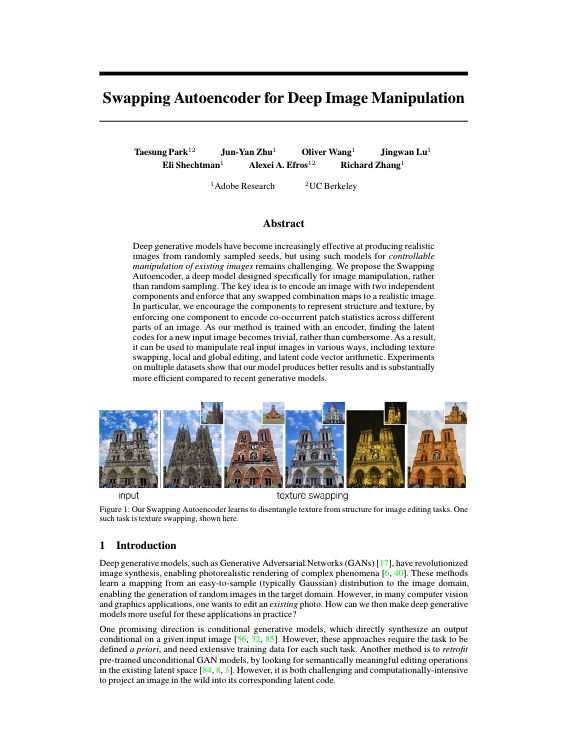

T. Park, J.-Y. Zhu, O. Wang, J. Lu, E. Shechtman, A. A. Efros, R. Zhang. Swapping Autoencoder for Deep Image Manipulation. NeurIPS, 2020.

Acknowledgements